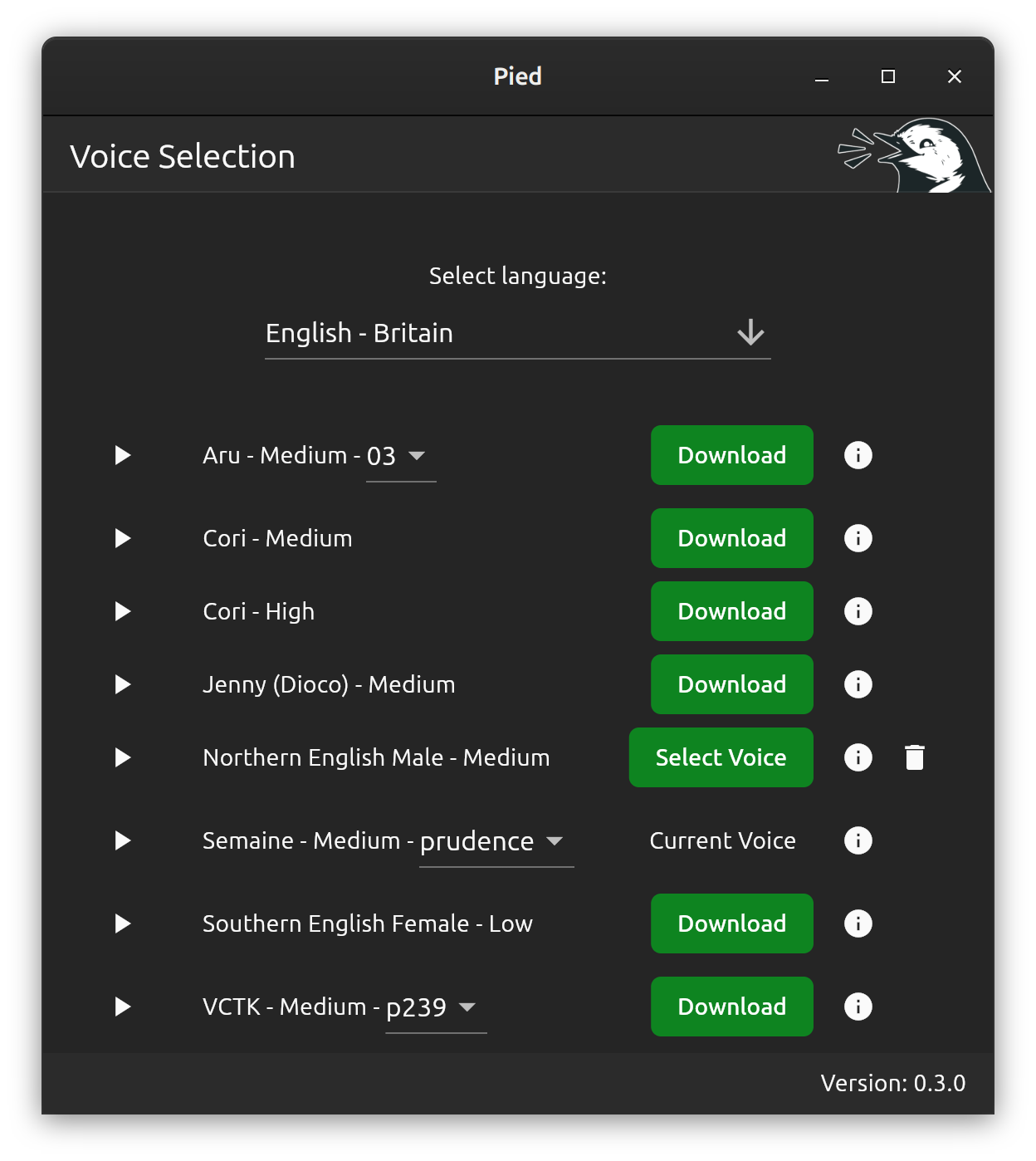

Recently Canonical have been working alongside Google to make it possible to write native Linux apps with Flutter. In this short tutorial, I’ll show you how you can render colour fonts, such as emoji, within your Flutter apps.

First we’ll create a simple application that attempts to display a few emoji:

    import 'package:flutter/material.dart';

    void main() {
      runApp(EmojiApp());
    }

    class EmojiApp extends StatelessWidget {
      @override
      Widget build(BuildContext context) {
        return MaterialApp(
          title: 'Emoji Demo',
          theme: ThemeData(
            primarySwatch: Colors.blue,
            visualDensity: VisualDensity.adaptivePlatformDensity,
          ),
          home: EmojiHomePage(title: '🐹 Emoji Demo 🐹'),
        );
      }
    }

    class EmojiHomePage extends StatefulWidget {
      EmojiHomePage({Key key, this.title}) : super(key: key);

      final String title;

      @override
      _EmojiHomePageState createState() => _EmojiHomePageState();
    }

    // The type argument is what makes `widget.title` resolve below.
    class _EmojiHomePageState extends State<EmojiHomePage> {
      @override
      Widget build(BuildContext context) {
        return Scaffold(
          appBar: AppBar(
            title: Text(
              widget.title,
            ),
          ),
          body: Center(
            child: Text(
              '🐶 🐈 🐇',
            ),
          ),
        );
      }
    }

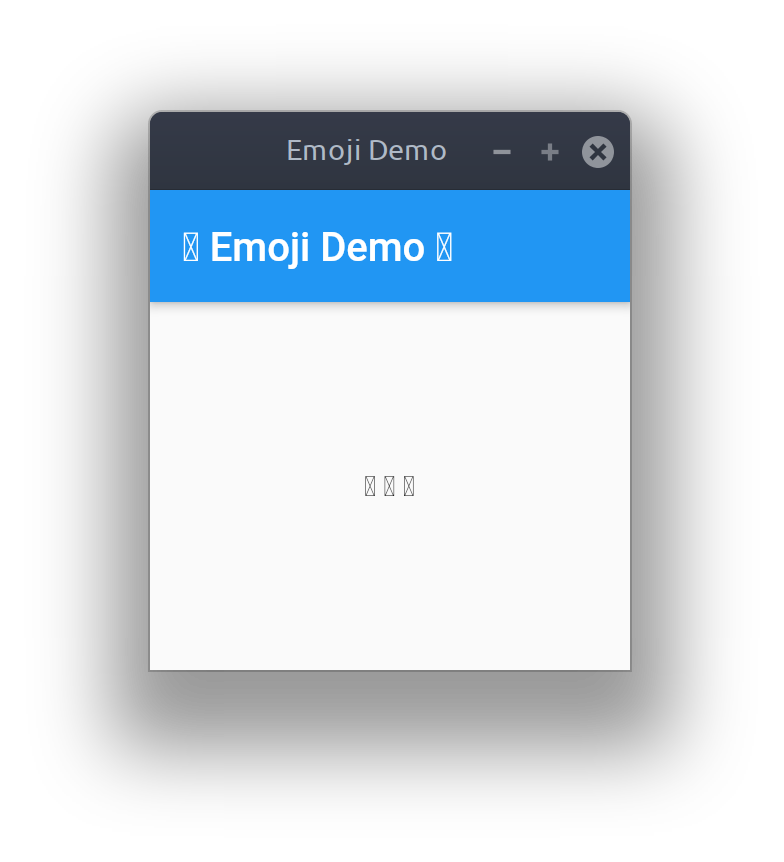

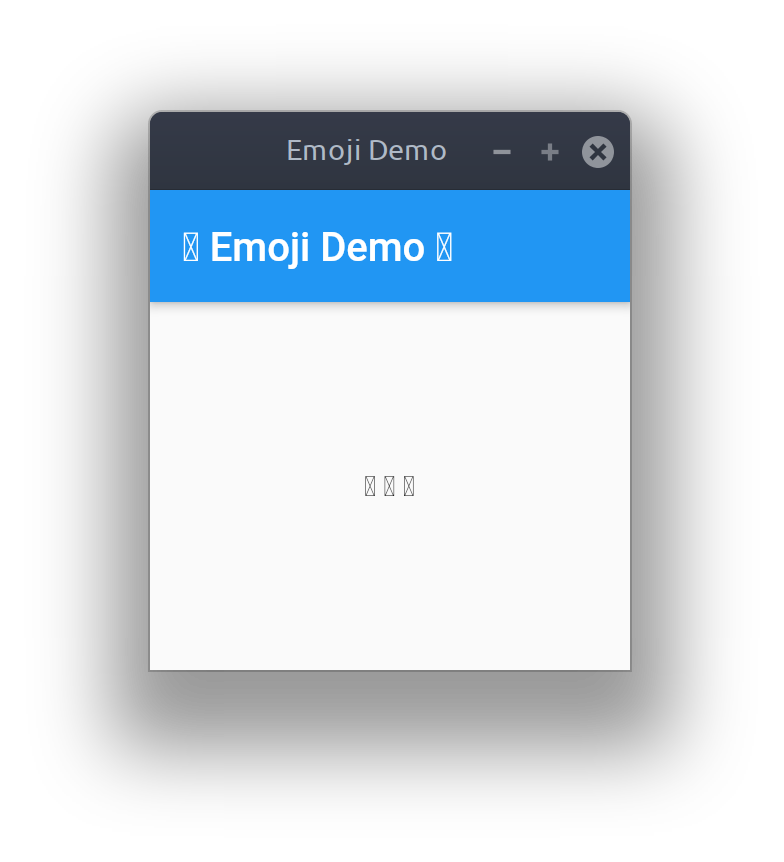

However, when we run it we find that our emoji characters aren’t rendering correctly:

For Flutter to be able to display colour fonts, we need to explicitly bundle them with our application. We can do this by saving the emoji font we wish to use into our project directory; to keep things organised, I’ve created a sub-directory called ‘fonts’ for this. Then we need to edit our ‘pubspec.yaml’ to declare the font file:

    name: emojiexample
    description: An example of displaying emoji in Flutter apps
    publish_to: 'none'
    version: 1.0.0+1

    environment:
      sdk: ">=2.7.0 <3.0.0"

    dependencies:
      flutter:
        sdk: flutter

    dev_dependencies:
      flutter_test:
        sdk: flutter

    flutter:
      uses-material-design: true
      fonts:
        - family: EmojiOne
          fonts:
            - asset: fonts/emojione-android.ttf

I’m using the original EmojiOne font, which was released by Ranks.com under the Creative Commons Attribution 4.0 License.
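If the emoji still fail to render later on, it can help to confirm that the font file really ended up in the application bundle. The following is a minimal sketch of such a check, not part of the original example: the function name `emojiFontIsBundled` and the constant `emojiFontAsset` are hypothetical, and it assumes the font is reachable through `rootBundle` under the same path that is declared in ‘pubspec.yaml’.

```dart
import 'package:flutter/services.dart' show rootBundle;
import 'package:flutter/widgets.dart';

// Assumption: this must match the `asset:` path declared in pubspec.yaml.
const String emojiFontAsset = 'fonts/emojione-android.ttf';

// Hypothetical helper: returns true if the font file can be loaded from the
// application's asset bundle, false otherwise.
Future<bool> emojiFontIsBundled() async {
  // The binding has to exist before rootBundle is used ahead of runApp().
  WidgetsFlutterBinding.ensureInitialized();
  try {
    final data = await rootBundle.load(emojiFontAsset);
    debugPrint('Found $emojiFontAsset (${data.lengthInBytes} bytes)');
    return true;
  } catch (error) {
    // rootBundle.load() throws when the asset is not in the bundle.
    debugPrint('Could not load $emojiFontAsset: $error');
    return false;
  }
}
```

One way to use it would be to make `main()` asynchronous and `await emojiFontIsBundled()` before calling `runApp()`, then remove the check once the font is confirmed to load.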

Finally, we need to update our application code to specify the font family to use when rendering text:

    class _EmojiHomePageState extends State<EmojiHomePage> {
      @override
      Widget build(BuildContext context) {
        return Scaffold(
          appBar: AppBar(
            title: Text(
              widget.title,
              // Render the title with the bundled emoji font.
              style: TextStyle(fontFamily: 'EmojiOne'),
            ),
          ),
          body: Center(
            child: Text(
              '🐶 🐈 🐇',
              style: TextStyle(fontFamily: 'EmojiOne', fontSize: 32),
            ),
          ),
        );
      }
    }

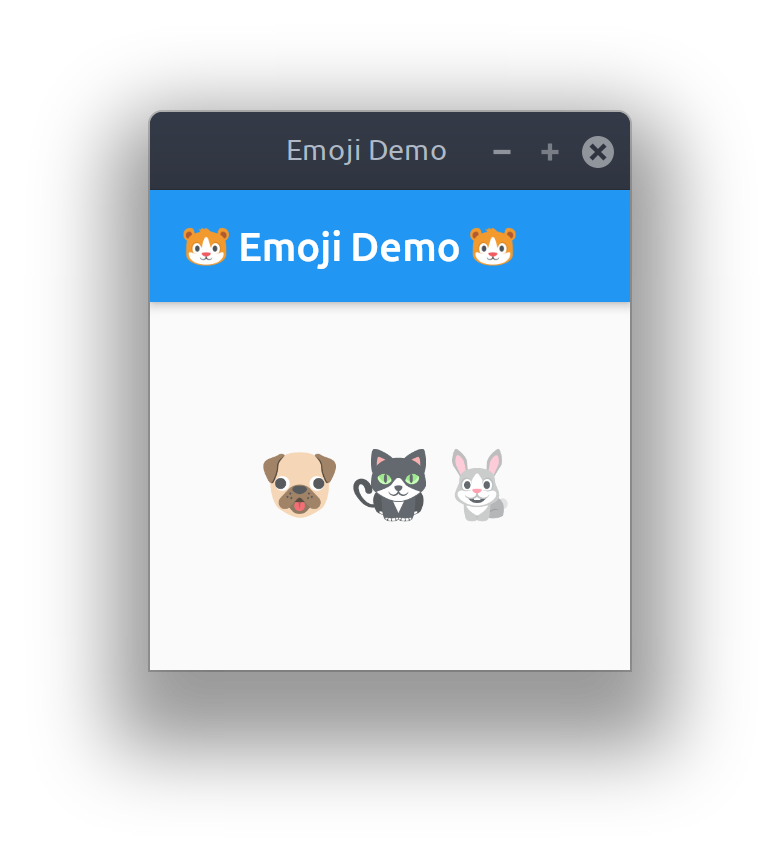

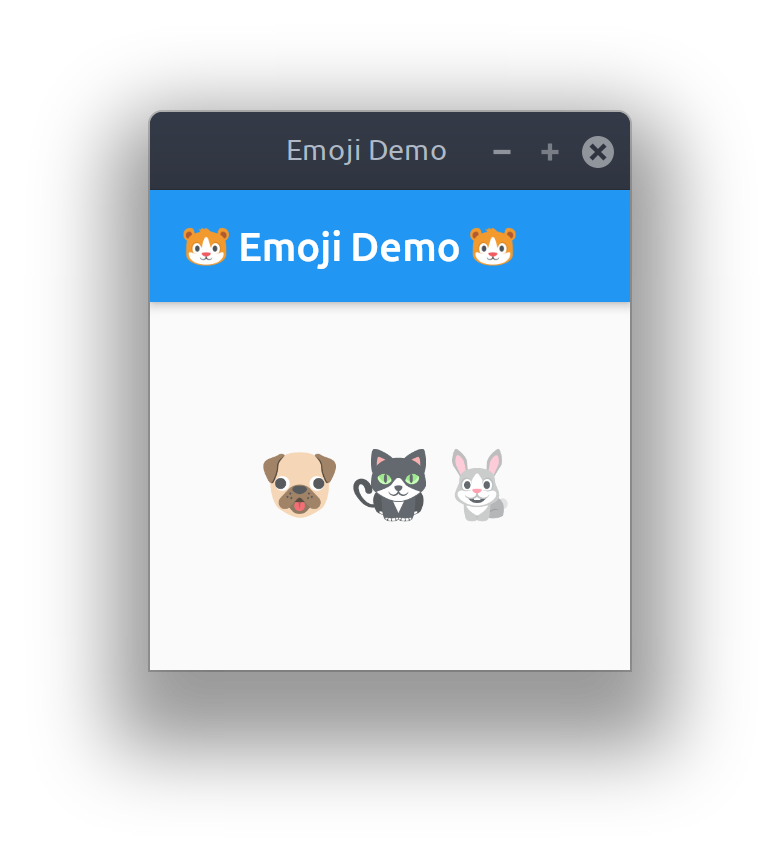

Now when we run our app our emoji are all rendered as expected:
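Repeating the same `TextStyle` on every `Text` widget gets tedious once more than a couple of widgets need emoji. As a rough sketch, the style could be wrapped in a small reusable widget. The `EmojiText` class below is hypothetical and not part of the example repository; it assumes the ‘EmojiOne’ family has been declared in ‘pubspec.yaml’ and uses the same pre-null-safety Dart syntax as the rest of this tutorial.

```dart
import 'package:flutter/material.dart';

// Hypothetical convenience widget: a Text that always uses the bundled
// emoji font family.
class EmojiText extends StatelessWidget {
  EmojiText(this.text, {Key key, this.fontSize}) : super(key: key);

  final String text;

  // Optional; when left unset the size is inherited from the ambient style.
  final double fontSize;

  @override
  Widget build(BuildContext context) {
    return Text(
      text,
      // 'EmojiOne' must match the family name declared in pubspec.yaml.
      style: TextStyle(fontFamily: 'EmojiOne', fontSize: fontSize),
    );
  }
}
```

With this in place, the app bar title could become `EmojiText(widget.title)` and the body text `EmojiText(..., fontSize: 32)`, keeping the font family name in a single place.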

The full source code for this example can be found here: https://github.com/Elleo/flutter-emojiexample